Welcome to the Mediate Metrics inaugural TV news political slant measurement report, based on our version 1.0 text classifier.

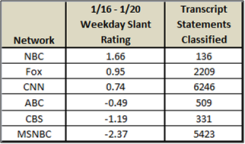

To our knowledge, this is the first objective TV news slant rating service ever published. Slant, by our definition, is news containing an embedded statement of bias (opinion) OR an element of editorial influence (factual content that reflects positively or negatively on a particular U.S. political party). This initial report focuses specifically on evaluating slant in the weekday transcripts of the national nightly news programs on the 3 major broadcast networks (ABC, CBS, and NBC), as well as in programming aired from 5 PM until 11 PM Eastern time on the top 3 cable news channels (CNN, Fox, and MSNBC). At this stage, analytical coverage varies by network, program, and date, but we intend to fill in the gaps over time.

In keeping with U.S. political tradition, content favoring the Republican Party in Chart 1 is portrayed in red (positive numbers), while content that tilts toward the Democratic Party is shown in blue (negative numbers).

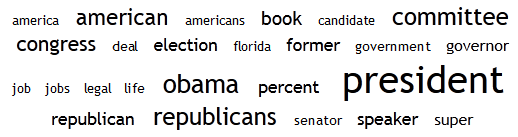

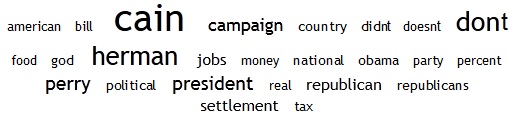

To grossly oversimplify, the numerical slant ratings behind Chart 1 come from a custom text analysis "classifier" built to extract statements of political slant from TV news transcripts. (For more on the underlying technology, see our post on Text Analytics Basics at http://wp.me/p1MQsU-at.) We have trained our classifier to interpret slant quite conservatively, following strict guidelines for the sake of consistency and objectivity. As a result, our ratings may appear to under-report the absolute slant of the content under review; they are best read as relative measures, compared against similar programming.

As mentioned, our analytical coverage varies by network, program, and date. Correspondingly, our confidence in each rating is directly proportional to the amount of transcript text available for classification. The exact amount of coverage per network is shown in the table to the right, and we have also indicated depth of coverage in Chart 1 through color shading. For example, the bars representing the slant ratings for NBC and CBS were purposely made lighter to reflect the relatively small transcript coverage for those networks.
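To make the chart conventions concrete, here is a minimal sketch of how a single bar's color and shading could be derived. It assumes a signed slant score (positive favors Republicans, negative favors Democrats) and a coverage figure for each network; the function name `bar_style`, the linear opacity rule, the 0.2 floor, and the gray color for a zero score are all hypothetical choices for illustration, not the method used to draw Chart 1.

```python
def bar_style(slant: float, coverage: float, max_coverage: float) -> tuple[str, float]:
    """Return a hypothetical (color, opacity) pair for one network's bar.

    slant: signed rating; positive favors Republicans (red),
           negative favors Democrats (blue).
    coverage: amount of transcript text classified for this network.
    max_coverage: largest coverage among all networks in the chart.
    """
    if max_coverage <= 0:
        raise ValueError("max_coverage must be positive")
    # Sign convention from the report: red for positive, blue for negative.
    # Gray for an exactly neutral score is an assumption.
    if slant > 0:
        color = "red"
    elif slant < 0:
        color = "blue"
    else:
        color = "gray"
    # Hypothetical shading rule: opacity scales linearly with coverage,
    # clamped to [0.2, 1.0] so low-coverage bars stay visible but lighter.
    opacity = max(0.2, min(1.0, coverage / max_coverage))
    return color, opacity
```

Tying opacity to coverage mirrors the idea that less transcript text means less confidence, so thinly covered networks are drawn lighter.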

During development, we determined that the Republican presidential primaries are an enterprise in which scrutiny is a normal and valuable part of the vetting process. Related news content, however, tends to be disproportionately negative and often does not contain a clear inter-party comparison, an element we view as a crucial condition for evaluating political slant. With those factors in mind, we have partitioned statements about the Republican presidential primaries and excluded them from most slant ratings at this juncture. Similarly, the Republican presidential debates and other dedicated program segments of that kind have been excluded entirely from classification, since they do not reflect the political positions of the networks, programs, or contributors under consideration.

We’ll publish slant ratings by program for the same January 16 – 20 time period tomorrow.